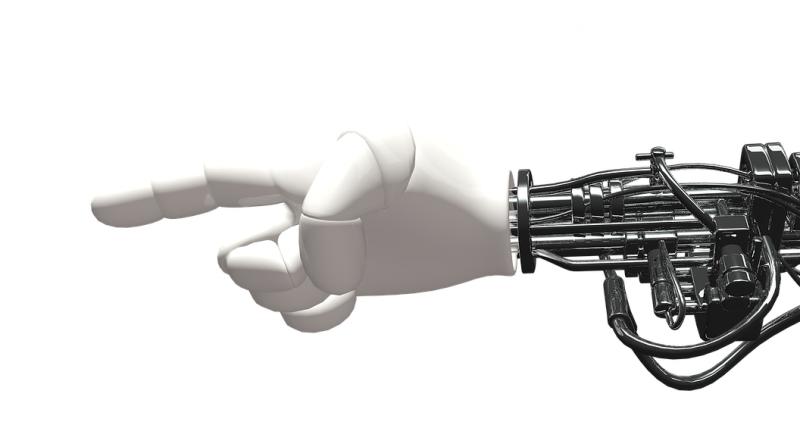

This AI-driven robot can touch and feel

Humans can identify objects even when blindfolded because they can rely on other senses, such as touch or smell. To bring similar capabilities to robots, researchers have integrated AI into a KUKA robot arm to help it identify objects.

Researchers at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) have developed a predictive AI model that can learn to see by touching and to feel by seeing, according to the official release notes.

Trained on a dataset of more than 3 million visual/tactile-paired images of objects such as tools, household products, and fabrics, the model can imagine what touching an object feels like and encode details about the object and its environment. For instance, when the robotic arm is fed tactile data from a shoe, the model can produce an image and work out the shape and material at the contact position.
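Why paired data matters can be shown with a deliberately simple toy: if every touch reading is stored alongside what the camera saw, a new touch reading can be matched to the closest stored one and its visual side returned. The sketch below assumes made-up three-number tactile features and uses text labels as stand-ins for images; `PairedSample`, `imagine_visual`, and the sample values are all hypothetical. It uses a nearest-neighbour lookup swapped in for illustration and is not the researchers' actual model.

```python
import math
from dataclasses import dataclass


@dataclass
class PairedSample:
    # Hypothetical toy record: one tactile reading paired with what the camera saw.
    tactile: list[float]  # made-up touch-sensor features, not real sensor data
    visual_label: str     # text stand-in for the paired image


def distance(a: list[float], b: list[float]) -> float:
    # Euclidean distance between two tactile feature vectors.
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def imagine_visual(dataset: list[PairedSample], tactile_query: list[float]) -> str:
    # "Imagine" the visual side by returning the pair whose tactile reading is closest.
    best = min(dataset, key=lambda s: distance(s.tactile, tactile_query))
    return best.visual_label


# Hypothetical paired samples (illustrative values only).
dataset = [
    PairedSample([0.9, 0.1, 0.0], "shoe sole (rubber)"),
    PairedSample([0.2, 0.8, 0.3], "folded fabric"),
    PairedSample([0.5, 0.5, 0.9], "metal tool edge"),
]

if __name__ == "__main__":
    print(imagine_visual(dataset, [0.85, 0.15, 0.05]))
```

A lookup like this can only return images it has already stored; a learned model trained on millions of pairs can instead generalize to touches it has never seen, which is what makes the CSAIL result notable.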